Virtual Point Light Bias Compensation

Nothing new here, just highlighting the 2004 paper Illumination in the Presence of Weak Singularities by Kollig and Keller. This paper presents a simple and elegant solution to the problem of clamping the geometry term when using virtual point lights for illumination.

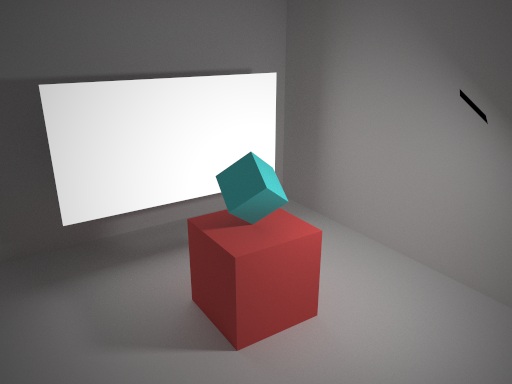

Consider the following scene with two area lights (in this case rendered using a reference path tracer):

Let's consider the standard (light tracing) instant radiosity algorithm, which basically repeats the following until the image is acceptable:

- Trace a light path through the scene, creating a virtual point light at each vertex

- Render primary rays from the camera to get the first intersection point for each pixel

- At each pixel, cast a shadow ray to each virtual point light and evaluate its contribution, accumulating the result
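The three steps above can be sketched as a plain accumulation loop. This is only a skeleton under stated assumptions: `trace_light_path`, `primary_hit` and `vpl_contribution` are hypothetical callbacks standing in for a real ray tracer, radiance is a single float rather than an RGB value, and a fixed pass count replaces "until the image is acceptable".

```python
from typing import Callable, Dict, Hashable, Iterable, List, Optional


def render_instant_radiosity(
    num_passes: int,
    pixels: Iterable[Hashable],
    trace_light_path: Callable[[], List[object]],          # hypothetical: one VPL per path vertex
    primary_hit: Callable[[Hashable], Optional[object]],   # hypothetical: first hit for a pixel
    vpl_contribution: Callable[[object, object], float],   # hypothetical: shading + shadow ray
) -> Dict[Hashable, float]:
    """Average the VPL lighting of `num_passes` independent light paths."""
    if num_passes <= 0:
        raise ValueError("num_passes must be positive")
    pixels = list(pixels)
    image = {p: 0.0 for p in pixels}
    for _ in range(num_passes):
        # Step 1: trace a light path, creating a VPL at each vertex.
        vpls = trace_light_path()
        for p in pixels:
            # Step 2: primary ray from the camera for this pixel.
            hit = primary_hit(p)
            if hit is None:
                continue
            # Step 3: evaluate every VPL (visibility is inside the callback).
            for vpl in vpls:
                image[p] += vpl_contribution(hit, vpl)
    # Each pass is one estimate of the image, so the result is their mean.
    return {p: total / num_passes for p, total in image.items()}
```

Keeping the scene access behind callbacks means the loop itself stays independent of how VPLs are generated, which is the part the later sections change.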

There are extensions to optimise this using interleaved sampling of VPLs, and you can of course shoot primary rays as a pre-process if you have fixed anti-aliasing/depth of field, but that is the basic algorithm.

The sharp shadows smooth out very quickly as passes are accumulated, but we get very noticeable singularities wherever the sample point is close to a virtual point light (VPL). These show up as bright spots in the image and take a very high number of passes to smooth out. Here's the same scene rendered using this algorithm; the shadows have softened but the singularities are clearly visible:

To work around this problem, we clamp the geometry term between the sample point and the VPL. Recall that the geometry term G is defined as follows (assuming that the vertices x and x' are visible to each other):
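For reference, the standard form of the geometry term is given below, where θ and θ' are the angles between the surface normals at x and x' and the segment joining the two points:

```latex
G(x, x') = \frac{\cos\theta \, \cos\theta'}{\lVert x - x' \rVert^{2}}
```

The inverse squared distance in the denominator is what blows up as the sample point approaches a VPL.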

Our clamped geometry term G' is defined using some minimum value b:
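One form consistent with b being a minimum value, stated here as an assumption, bounds the squared distance in the denominator from below:

```latex
G'(x, x') = \frac{\cos\theta \, \cos\theta'}{\max\!\left(b,\; \lVert x - x' \rVert^{2}\right)}
```

With this form G' equals G whenever the two points are at least √b apart, and only nearby pairs are affected.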

Setting b to 0.1 and re-rendering the scene yields the following output:
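As a small illustration of what the clamp does numerically, here is a sketch of the geometry term with an optional lower bound on the squared distance. The function name and the distance-clamp form are assumptions for illustration; the points are assumed mutually visible and the normals unit length.

```python
import math
from typing import Sequence


def geometry_term(
    x: Sequence[float],
    n_x: Sequence[float],
    y: Sequence[float],
    n_y: Sequence[float],
    b: float = 0.0,
) -> float:
    """cos(theta) * cos(theta') / max(b, |x - y|^2); b = 0 gives the unclamped term."""
    d = [yi - xi for xi, yi in zip(x, y)]
    dist2 = sum(c * c for c in d)
    if dist2 == 0.0:
        return 0.0  # coincident points: direction undefined, treat as no transfer
    inv_len = 1.0 / math.sqrt(dist2)
    w = [c * inv_len for c in d]  # unit direction from x to y
    cos_x = max(0.0, sum(n * c for n, c in zip(n_x, w)))
    cos_y = max(0.0, -sum(n * c for n, c in zip(n_y, w)))
    # Assumed clamp: never let the squared distance drop below b.
    return cos_x * cos_y / max(dist2, b)


# Two facing surfaces 0.1 units apart: unclamped vs. clamped with b = 0.1.
near_unclamped = geometry_term((0, 0, 0), (0, 0, 1), (0, 0, 0.1), (0, 0, -1))
near_clamped = geometry_term((0, 0, 0), (0, 0, 1), (0, 0, 0.1), (0, 0, -1), b=0.1)
```

For the two facing points above, the unclamped term is about 100 while the clamped one is about 10, which is the energy loss that shows up as darkened corners.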

As expected, our bright pixels have disappeared, but we're left with two issues:

- We no longer converge to the same final image (corner areas are darker, almost like an ambient occlusion effect).

- We had to choose a value for b.

The first issue is a problem if you are using a virtual point light renderer for preview, but your final render is done using a different algorithm, because a preview tool is not very useful if it shows different results. The second issue is a problem because the optimal b (one that removes just enough energy to hide the singularities) will vary with light path density in the scene, and exposing a parameter for something generally means it will be set to something far from optimal. :)

Let's just focus on the first issue. The paper by Kollig and Keller tackles this using the following technique: continue the eye path to recover the lost energy.

This is very simple to implement: after evaluating our VPLs we now sample the BSDF and continue the eye path. In the case where we hit some geometry, we check the following term to see what proportion of the VPL energy along this path is lost by clamping. This is:
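Assuming the clamp replaces the squared distance with max(b, ‖x − x'‖²), the lost proportion is the relative difference between the unclamped and clamped geometry terms, with x the current sample point and x' the point hit by the continued eye ray. The cosines cancel, leaving a function of distance only:

```latex
k = \frac{G(x, x') - G'(x, x')}{G(x, x')}
  = \max\!\left(0,\; 1 - \frac{\lVert x - x' \rVert^{2}}{b}\right)
```

So k approaches 1 for hits very close to the sample point and falls to 0 once the squared distance reaches b.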

If k is zero then we are done: there is no more energy to gather. If k is non-zero, we scale future contributions to this pixel by k and recurse: evaluate our VPLs at this new sample point, then sample the BSDF to continue the eye path. In this way, all the energy lost by clamping the VPLs is eventually gathered by the eye path.
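The recursion described above can be sketched as a loop. This is a minimal illustration, not the paper's implementation: `clamped_vpl_sum` and `continue_eye_path` are hypothetical callbacks, k is assumed to be max(0, 1 − d²/b) for a hit at distance d, the BSDF sampling weight is folded into the running scale, and `max_depth` is a safety cap added here.

```python
from typing import Callable, Optional, Tuple

Point = Tuple[float, float, float]


def lost_fraction(dist2: float, b: float) -> float:
    """Assumed k = max(0, 1 - d^2 / b): share of G removed by the clamp (b > 0)."""
    return max(0.0, 1.0 - dist2 / b)


def gather_with_compensation(
    x: Point,
    b: float,
    clamped_vpl_sum: Callable[[Point], float],  # hypothetical: VPL lighting using G'
    continue_eye_path: Callable[[Point], Optional[Tuple[Point, float, float]]],
    max_depth: int = 64,                        # safety cap, not part of the description
) -> float:
    """Clamped VPL lighting at x plus the energy recovered along the eye path.

    `continue_eye_path` samples the BSDF at a point and returns
    (hit_point, hit_distance, bsdf_weight), or None if the ray escapes.
    """
    total = 0.0
    scale = 1.0
    for _ in range(max_depth):
        # Evaluate the (clamped) VPLs at the current eye-path vertex.
        total += scale * clamped_vpl_sum(x)
        hit = continue_eye_path(x)
        if hit is None:
            break  # ray left the scene: nothing more to gather
        next_x, dist, bsdf_weight = hit
        k = lost_fraction(dist * dist, b)
        if k == 0.0:
            break  # hit is far enough away that the clamp removed nothing
        # Scale all future contributions by k (and the BSDF sampling weight).
        scale *= k * bsdf_weight
        x = next_x
    return total
```

Writing it as a loop rather than a recursive call keeps the running scale explicit, and the two early exits correspond to the two ways the text says the process ends.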

(A good choice of b usually defines a maximum ray distance that is very short, so rays spawned by this method tend not to hit anything, terminating the recursion very quickly.)
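To make the maximum ray distance concrete, assume the clamp bounds the squared distance from below by b, so that k = max(0, 1 − ‖x − x'‖²/b). The eye path then only continues for hits closer than √b:

```latex
k > 0 \iff \lVert x - x' \rVert^{2} < b \iff \lVert x - x' \rVert < \sqrt{b},
\qquad b = 0.1 \;\Rightarrow\; \sqrt{b} \approx 0.316
```

Under that assumption, the b = 0.1 used earlier limits compensation rays to roughly 0.32 scene units, so they can be traced with that as their maximum length.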

Implementing this algorithm for our example scene yields this result, which now converges to the same image as our path traced reference.

Here is a difference image (multiplied by 4) that shows where the eye path recursion happened.

There are of course more interesting ways to generate VPLs than light tracing, so here are a couple of links:

- Bidirectional Instant Radiosity by Segovia et al.

- Metropolis Instant Radiosity by Segovia et al.

And there are alternative methods of doing bias compensation. Here are a couple of recent papers I liked that do the bias compensation in screen space:

- Screen-Space Bias Compensation for Interactive High-Quality Global Illumination with Virtual Point Lights by Novak et al.

- Instant Multiple Scattering for Interactive Rendering of Heterogeneous Participating Media by Engelhardt et al. (intentionally the same link as previous).